Can LLMs Actually Do ML? Part 1

Given the recent hype in LLMs, I wonder whether they can do a good job at machine learning. This was the question that I spent my weekend trying to answer!

After taking the course from Ed Donner on LLMs, there is a section on using LLMs to perform traditional machine learning tasks, such as regression. In the course materials, we played with the Amazon product dataset. It was a cool demo, but I wasn’t fully convinced. That dataset might be floating around in training data, so of course an LLM would look good.

Generating Synthetic Datasets

So I approached this problem by creating an entirely new synthetic dataset that LLMs haven't seen before.

Even then, I rely not only on one but three LLMs to create a synthetic dataset that contains 30k rows of data that contain fictional property listings.

I also wanted to see how well public-facing UIs handle “make me a clean CSV” prompts.

Verdict: ChatGPT and Claude handled it easily, and Claude even spun up a neat little UI to download the data. Gemini struggled here; it wouldn’t produce a proper CSV at all. I also used Perplexity to generate another synthetic set via the GPT-5 Thinking Model.

Below is the prompt I fed into ChatGPT, and it helped me enhance it.

Original Prompt

I want to build a synthetic dataset of property sale data in a fictional country. Should contain relevant columns such as subrub, property type, # of bed rooms, # of bathrooms, # of parking spaces, land size and sale price. Also contain a column with a brief description of the house (no more than 100 characters). Also remember it should be realistic, as in if the property has bigger size it should be more expensive.

ChatGPT enhanced Prompt

You are a data generator. Create a synthetic dataset of property sales in a fictional country.

The dataset should:

- Contain realistic values for each row.

- Include the following columns:

- Suburb (fictional but plausible suburb names)

- Property Type (e.g., House, Apartment, Townhouse, Villa, Unit)

- Bedrooms (integer, usually between 1–6)

- Bathrooms (integer, usually between 1–4)

- Parking Spaces (integer, usually between 0–4)

- Land Size (square meters, usually between 50–2000 depending on property type)

- Sale Price (in fictional local currency; larger properties in desirable suburbs should generally be more expensive)

- Description (short free-text description of the property, no more than 100 characters, realistic and varied)

- Relationships should make sense:

- Larger land size and more bedrooms/bathrooms → higher sale price.

- Apartments and units usually have smaller land sizes and fewer parking spaces.

- Houses and villas usually have larger land sizes and higher prices.

- Suburbs closer to "city center" should generally be more expensive than those far away.

- Make the data diverse and plausible, avoiding repetitive values.

- Output should be structured in CSV format with headers included.

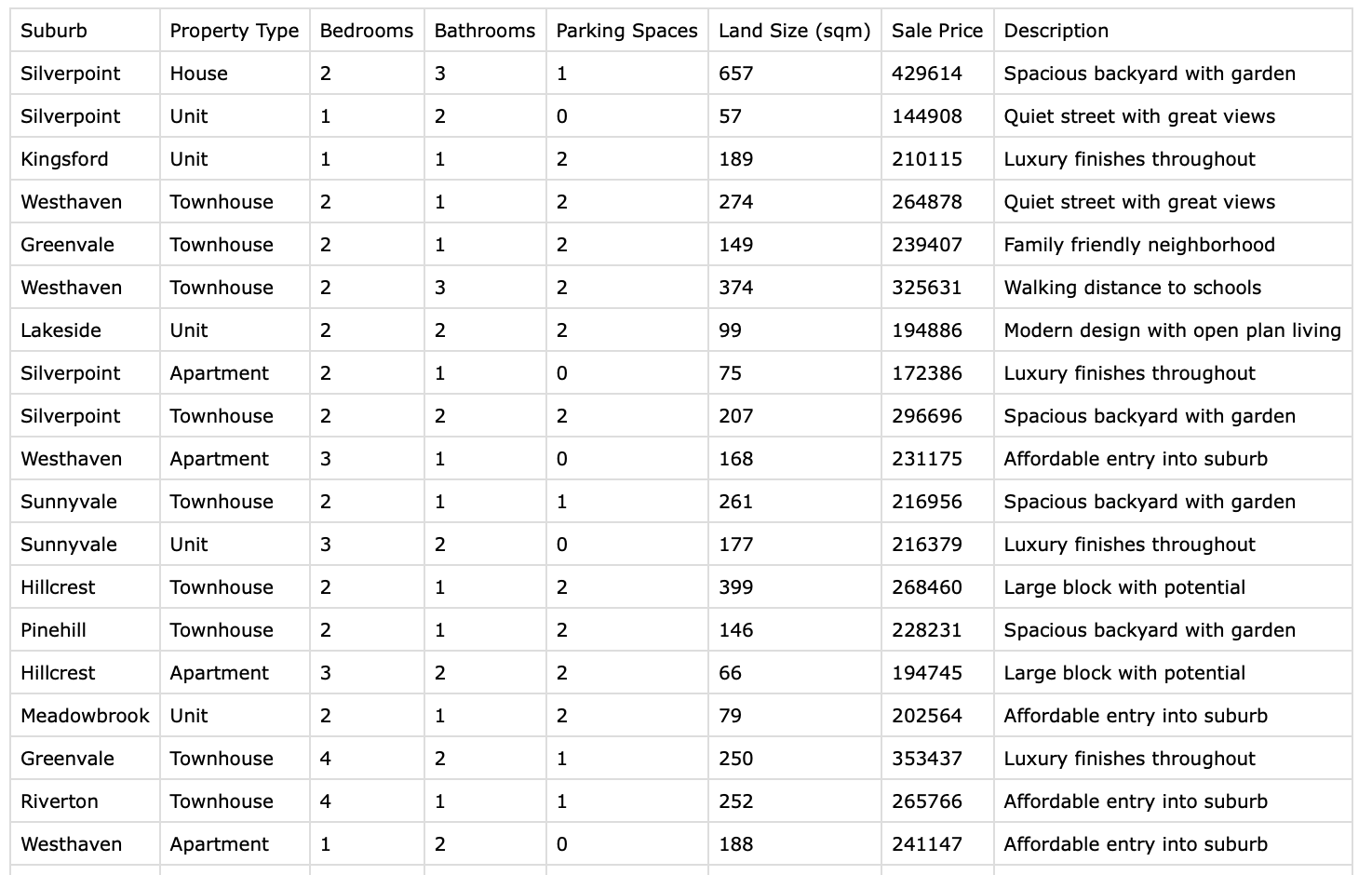

Below is the output from ChatGPT:

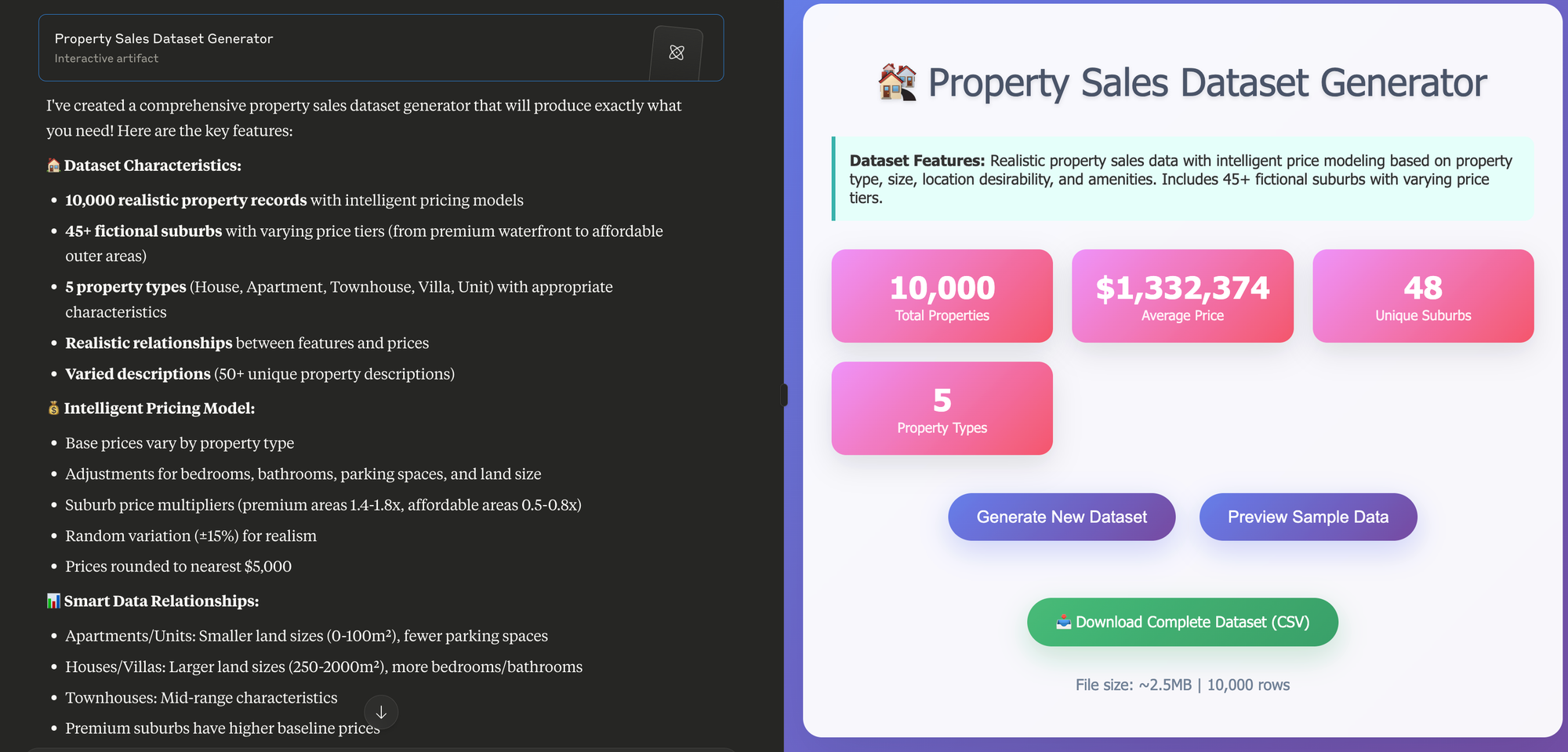

And this is how Claude (from Anthropic) responded:

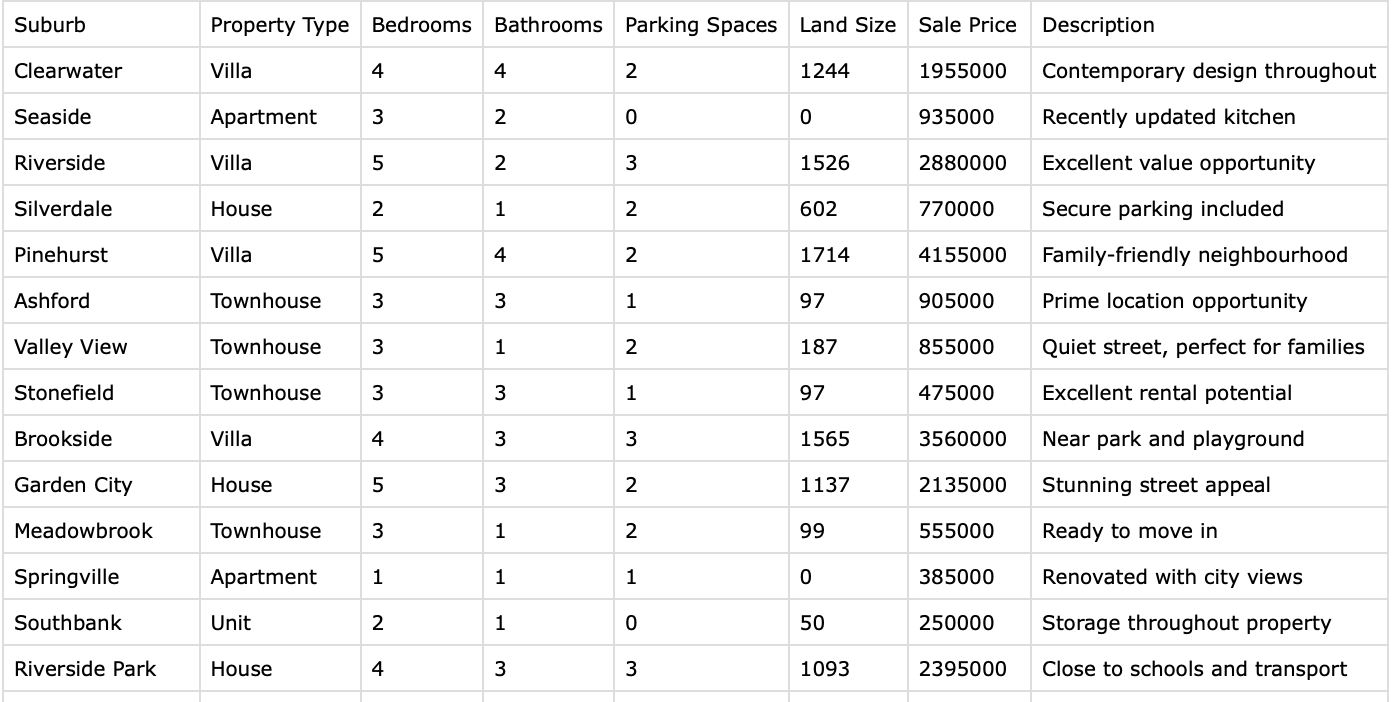

Lastly, I used Perplexity to generate a synthetic dataset from GPT-5 Thinking Model.

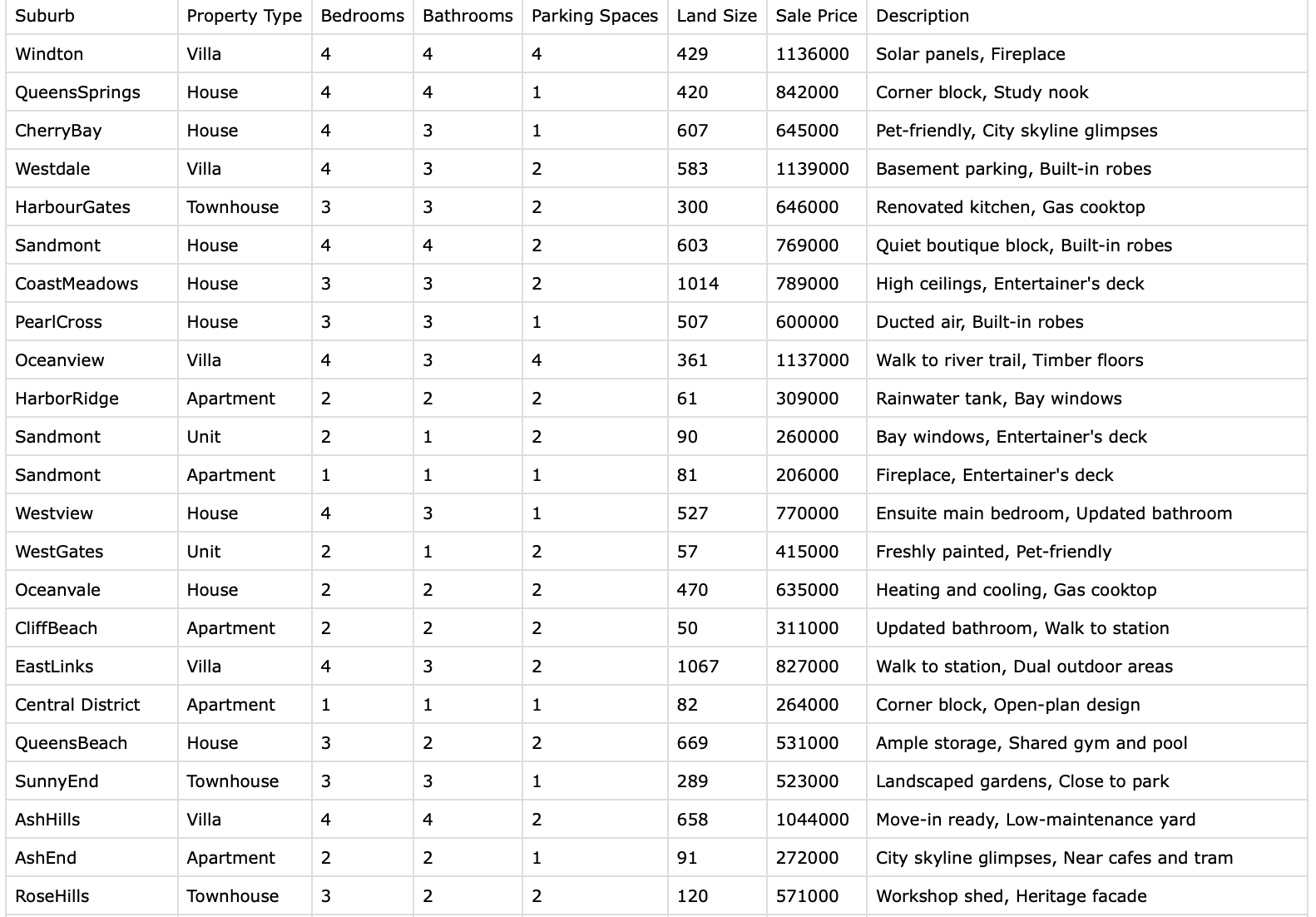

As a side note, this is how Gemini responded.

Exploratory Data Analysis

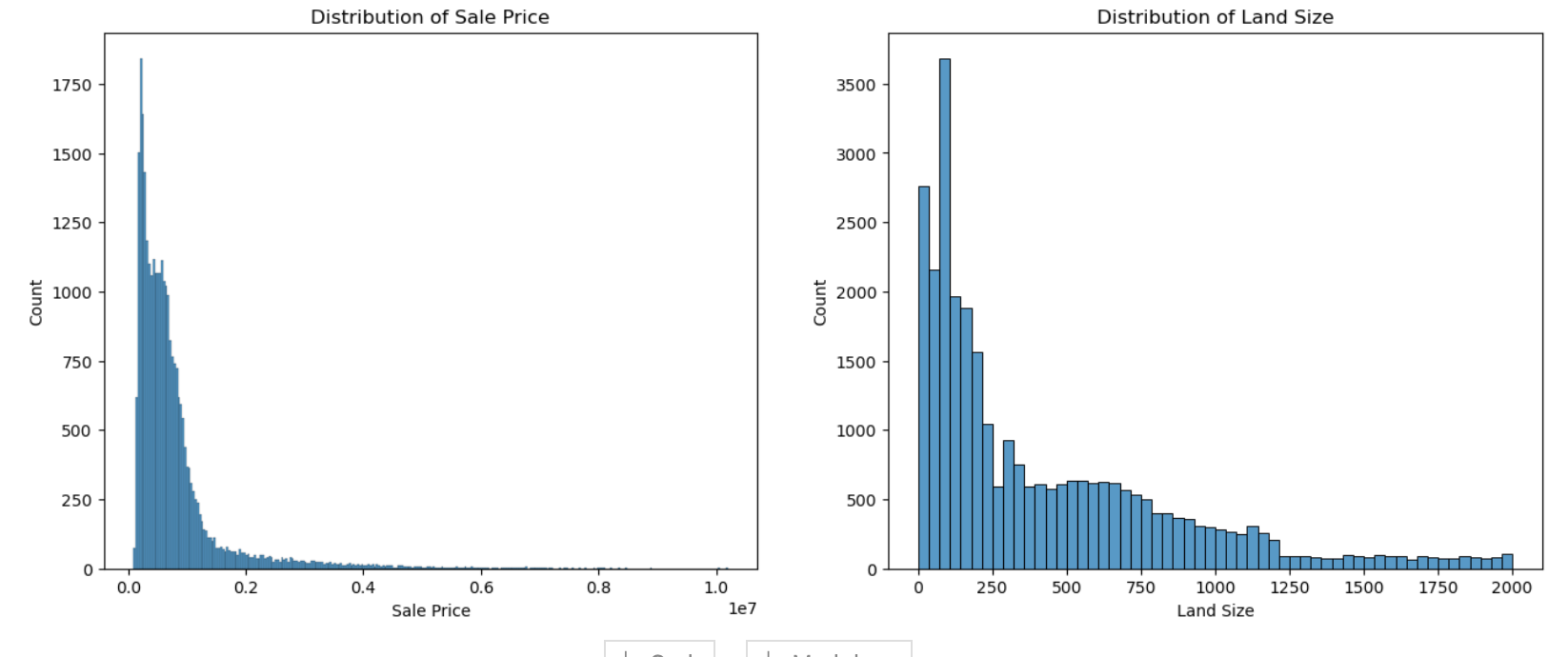

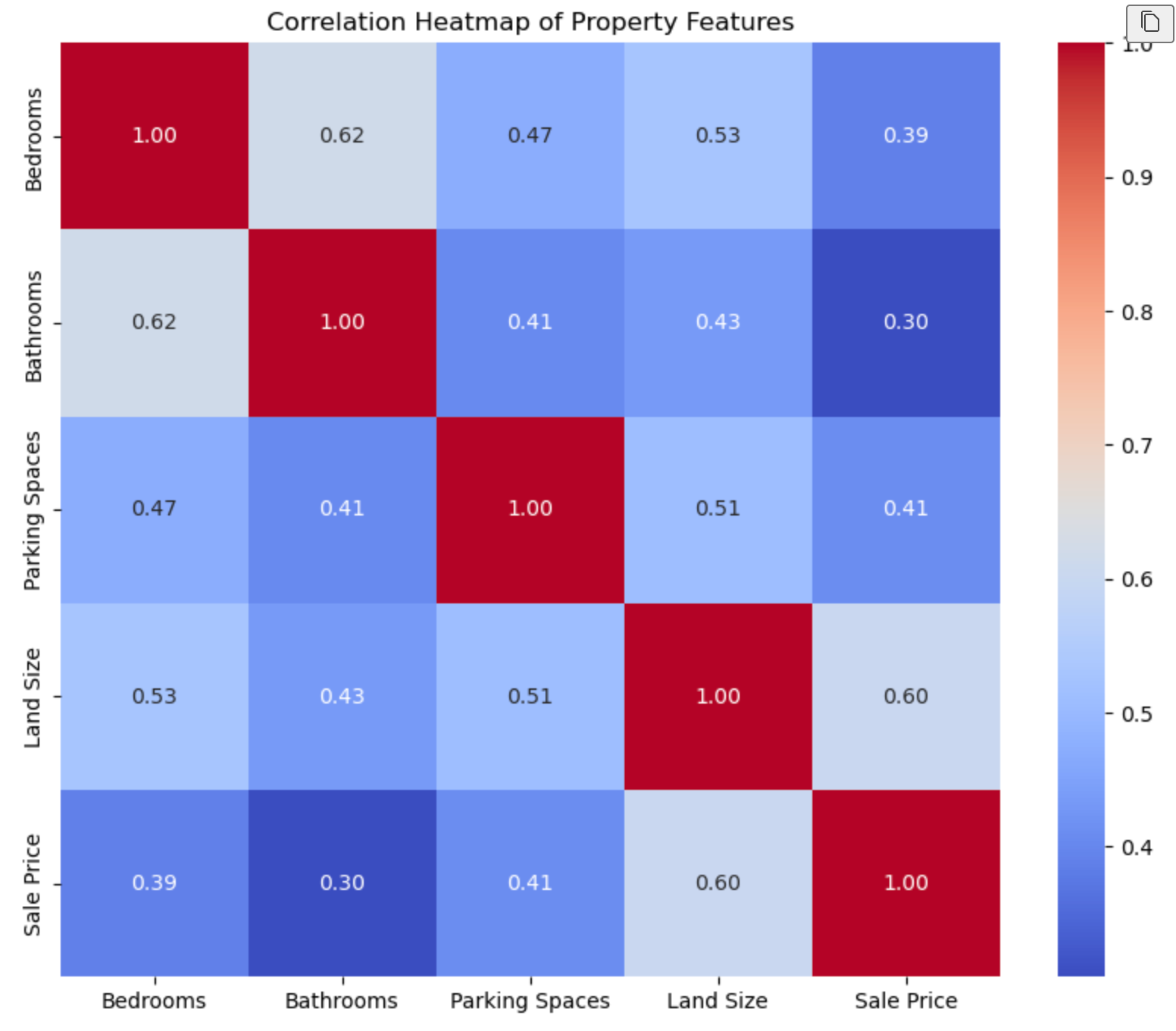

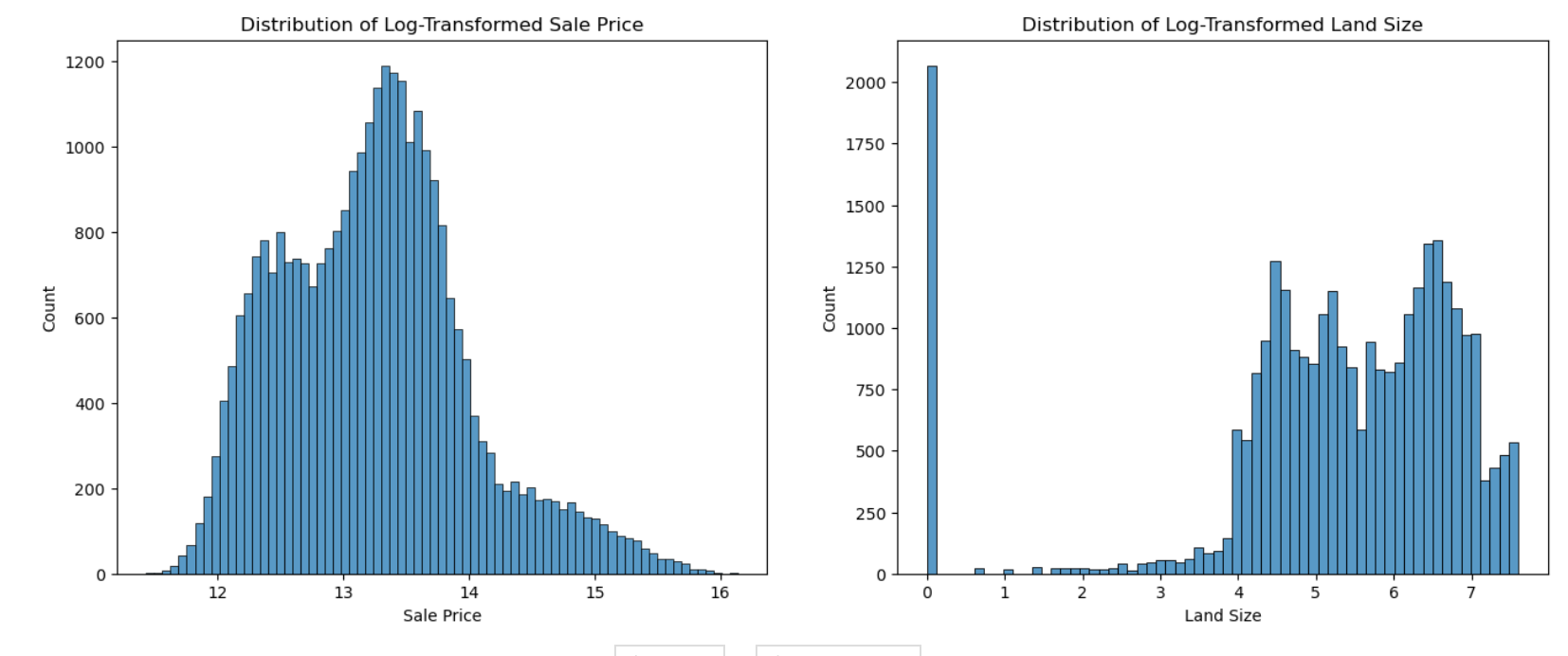

With all three datasets combined (~30k listings), I ran some quick EDA to check the shape of things. Despite coming from different LLMs, the patterns were surprisingly consistent: both land size and sale price were right-skewed with long tails. I log-transformed Land Size and Sale Price to make the distributions friendlier for modeling.

I also use the Gemini 2.5 Pro model from Google AI Studio as a collaborator when I am running these codes side-by-side to interpret the results and think about the next steps. I tried to use GPT-5 model, but it sounds more like an arrogant smart person who is not patient with my persistent questioning, compared to Gemini 😵 (Plus it's free, even after you go over the limit!)

Now that my dataset is ready, I can start by testing baseline models.

Defining Baseline models

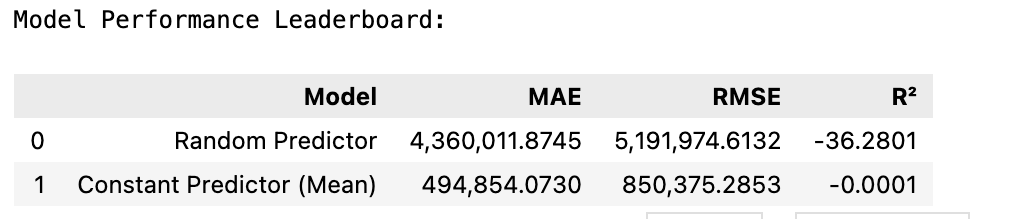

I have created two baseline models. My rationale is simple: these two are the absolute worst models in terms of predicting the house price, so any model should perform better than them.

- The first model is a random number generator for the price ranging from 100k to 10M for every house in the dataset.

- The second model is a constant value (in this case, I will be using the average house price) from the dataset.

Below are the evaluation metrics:

Mean Absolute Error (MAE):

What it is: The average of the absolute differences between the predicted and actual values.

In plain English: On average, how many dollars was our prediction off by?

Advantage: It's very easy to understand and interpret because it's in the same unit as the target (dollars).

Root Mean Squared Error (RMSE):

What it is: The square root of the average of the squared differences between predicted and actual values.

In plain English: Similar to MAE, but it penalizes large errors much more heavily. If you have one prediction that is wildly off, the RMSE will be much higher than the MAE.

Advantage: It's the most common metric for regression tasks. It is also in the same unit as the target (dollars).

R-squared (R² or Coefficient of Determination):

What it is: The proportion of the variance in the target variable that is predictable from the features.

In plain English: How well does our model explain the data's variation compared to a simple model that just predicts the average price for everything?

Interpretation:1.0: The model explains 100% of the variation (a perfect model).0.0: The model explains no variation. It's no better than just predicting the mean value. Negative: The model is worse than just predicting the mean. This is what we expect from our random model!

Here are the results of these baseline models:

Running Machine Learning Models

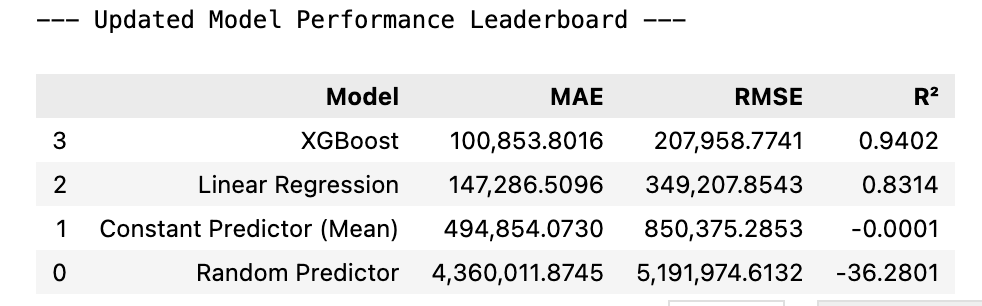

Now that the baseline models are established, I proceeded to run with traditional models such as Linear Regression Model and XGBoost. They run without any dramas and the results indicated that XGBoost has the best result!

In the next post, I will talk about how I take this dataset and make it ready for LLMs! Stay tuned!

Part 2 is up here!